It began with morality, and now it ends with leaks and deletion commands. Two phenomena are currently showing how conservative digital culture is shifting toward control and denunciation: moral self-surveillance in the form of apps like Covenant Eyes and instrumentalized civic technology in the form of “Cancel the Hate.” On paper, both promise purity — one private chastity, the other public moral policing. In practice, they produce rituals of control, reputational risks and, in the worst case, tangible dangers.

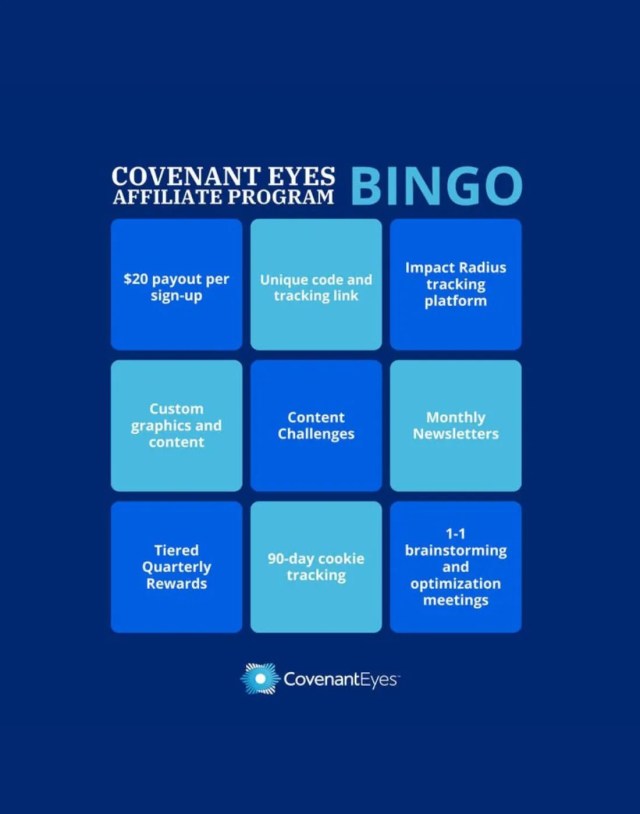

Covenant Eyes markets itself as the digital guardian of the family: accountability software that logs browsing behavior and forwards it to “trusted partners.” In conservative evangelical circles the tool is often praised as a moral aid. In a widely circulated interview, the Speaker of the United States House of Representatives, Mike Johnson, described how he maintains an “accountability partnership” with his son — father and son watching each other’s internet use. Seen soberly, this image is more than a private arrangement: it reveals the cultural premise of a movement that privatizes and politically legitimizes control. Charlie Kirk has energetically promoted Covenant Eyes; in interviews and on a Covenant Eyes podcast he praised the software and explicitly urged “good Christians” to use it as a means of digital self-control.

This is not harmless digital asceticism. It is the private translation of state morality into the family, certified by platforms and on its way to becoming everyday practice. Covenant Eyes thus becomes an inquisition in the pocket: not the church, not the state, but the father as overseer, enforcing his authority through screenshots, automatic alerts and “trusted” notifications. The liberal rhetoric of freedom shrinks here to the demand “less state, more father.” In a society where personal sexuality has long been politicized, this is not merely ironic; it is dangerous.

Mike Johnson, Speaker of the United States House of Representatives, vouches for the digital virginity of the West

In parallel, another group built a different form of “purity guarding”: Cancel the Hate, an app launched after the attack on Charlie Kirk (September 10) that claims to hold “individuals accountable for their public words.” It explicitly targets professionals in exposed positions, such as doctors, teachers and public officials, whose professional integrity and privacy are especially sensitive. The app was supposed to create transparency; in truth it produced security gaps.

The site presents itself as a moral authority, the clean hand of public debate; in reality it serves one purpose: to generate and consolidate hate. Under the cover of “verification” and “transparency” it accumulates accusations against professionals in exposed positions, mobilizes outrage and supplies material that is then recycled into online pile-ons and character assassinations. Even if the terms of service appeal to responsibility, the practice shows the opposite: targeted exposure instead of clarification, instrumentalization instead of protection. In short, what is sold as civic courage is in truth an amplifier for fear, mistrust and exclusion.

Our investigation documents the sequence of events: the app was put online quickly, was flooded with reports (the founder claimed 38,000 submissions within 30 hours) and soon fell apart technically. Our research uncovered a vulnerability that exposed users’ email addresses and phone numbers. To the eager distributors in AfD Telegram channels: congratulations, your hoarding has now sent the address list out into the internet. Enjoy staying accountable. The files are now circulating online. A data package with 142 entries demonstrated that it was possible to read profiles and even delete accounts. The operators did not respond; the application disappeared from the web within hours, yet the website itself remains in operation. A project that promised revenge and transparency delivered self-exposure.

The technical reality is brutal: those who build platforms for anonymous denunciation bear a double responsibility — toward the accused and toward the accusers. Faulty implementations turn denunciators into victims. Email addresses, phone numbers, perhaps even professional details — all can fall into the wrong hands. This is not an abstract risk: for doctors, teachers or officials the mere threat of public exposure can endanger livelihoods, careers and safety.

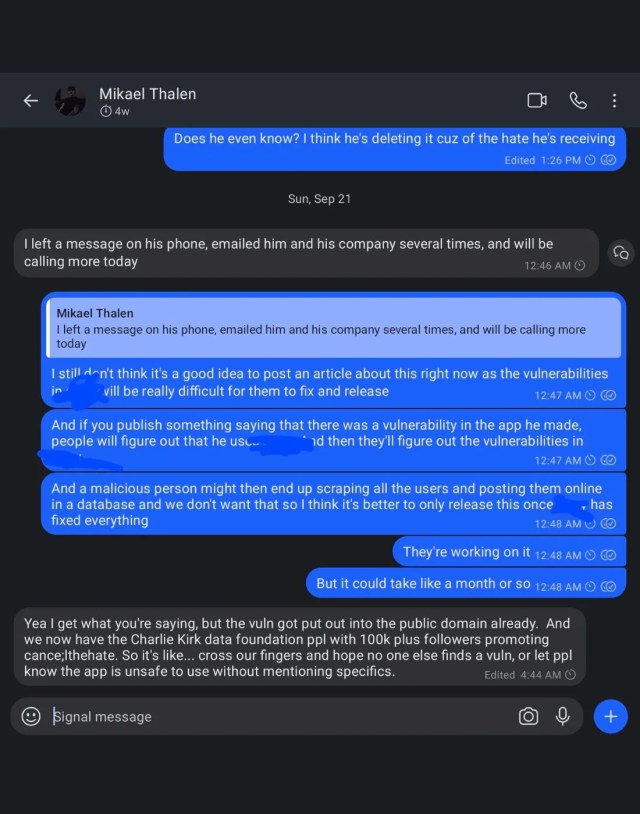

A leak in our possession reveals how the crisis was discussed internally. In a conversation dated September 21 the message is explicit: they had left messages on his phone, emailed him and his company several times, and did not want to publish details immediately because the vulnerabilities would be “very hard to fix.” The same excerpt also admits that the flaw had already been “carried into the public” and that groups with large reach were promoting the app. Put plainly, the internal communication documents that the team knew the danger but deliberately chose to wait for fixes, a calculus that accepted the ongoing endangerment of users instead of warning them immediately.

Both cases, Covenant Eyes and Cancel the Hate, are linked by the same logic: control as virtue, disclosure as remedy, acceleration as principle. Where religious morality meets technology, an ecosystem emerges that not only enforces norms but stabilizes power structures. The people behind these projects call for less state intervention while building digital infrastructures that institutionalize private surveillance. And because such projects are often spun up quickly by activist networks and funded by donations, they lack basic safeguards: security audits, data protection reviews and legal vetting.

The result is a threefold risk: first, a moral risk — the normalization of shame as a control mechanism; second, a technical risk — real vulnerabilities that expose users; third, a societal risk — the creation of organizations and networks that pursue political mobilization via private instruments. Jason Sheppard, the founder behind Cancel the Hate, is no anonymous tinkerer; our research documents his prior activities and ties within conservative circles. That does not automatically render the matter criminal in a legal sense — but it does make it politically charged and susceptible to abuse of power.

What does this mean in practical terms? Three demands that go beyond moralizing: First, every platform that enables anonymous reporting must undergo mandatory security and data protection audits by independent third parties before going live. Second, operators of such services must disclose funding, moderation rules and lines of responsibility — transparency is not a bonus, it is a condition. Third, legal regulations must clarify how denunciation data are handled — who stores what, for how long, and with what deletion guarantees.

And for those who may be affected: check your digital visibility, protect accounts with strong passwords and two-factor authentication, and seek legal advice if you believe you are the target of a coordinated campaign. For journalists and security experts the rule is clear: exposing vulnerabilities is necessary, but acting responsibly is essential — sensitive data must never be treated as trophies.

In the end the lesson is bitter but simple: those who impose purity on others must not enter the digital arena unarmed. The moral purity front has chosen two technologies as weapons in the 21st century — the surveillance of the self and the denunciation of others. Together they produce not purification but vulnerability. Those seeking real solutions should not rely on apps that promise moral efficiency, but on institutions that guarantee the rule of law, data protection and independent oversight.

Covenant Eyes may aim to save families. Cancel the Hate may promise justice. But both teach the same lesson: purity achieved without safeguards quickly reveals itself as humiliation, and too often as a danger. This is the purity culture of right-wing extremism, returning at a time when we believed such a thing impossible. Resist and stand up against it: all of us have children, or people whose future we care about. In 2024/2025 there were renewed reports in Germany of AfD “info portals” being reactivated or newly launched in individual states (for example, the relaunch under the name “Neutral Teacher” or similar sites), to which ministries of education and teachers’ unions again responded with warnings. A “party” like the AfD (we use the term only out of journalistic duty; it does not reflect our opinion) must never come to power. That is why it is worth standing up, contributing, supporting and being resolute.

Investigative journalism requires courage, conviction – and your support.

Please support our journalistic fight against right-wing populism and human rights violations. We do not want to finance our work through a paywall, so that everyone can read our investigations – regardless of income or background.

If I understand this correctly, the users are the digital block wardens, aren't they? No sympathy.

Right, of course no sympathy; the point here is to show how twisted the whole thing is.

A world that is simply bent on putting itself in the pillory. That is terrible.

…unfortunately, I can only agree.

In the Third Reich the Gestapo, in Russia the KGB (which spied very intensively on its own citizens), in the GDR the Stasi with its thousands of informants.

No one was safe.

Whether in the family, among friends or with coworkers.

Any criticism could lead to an arrest.

Now it happens digitally.

Even easier, with even more damage.

I believe the trend in the US is toward second or third phones.

No matter what people claim.

Nobody really lets others monitor their internet behavior.